ChatGPT has meltdown and starts sending alarming messages to users

AI system has started speaking nonsense, talking Spanglish without prompting, and worrying users by suggesting it is in the room with them

Andrew Griffin

(Copyright 2023 The Associated Press. All rights reserved.)

ChatGPT appears to have broken, providing users with rambling responses of gibberish.

In recent hours, the artificial intelligence tool appears to have been answering queries with long and nonsensical messages, talking Spanglish without prompting – as well as worrying users by suggesting that it is in the room with them. There is no clear indication of why the issue happened, but its creators said they were aware of the problem and were monitoring the situation.

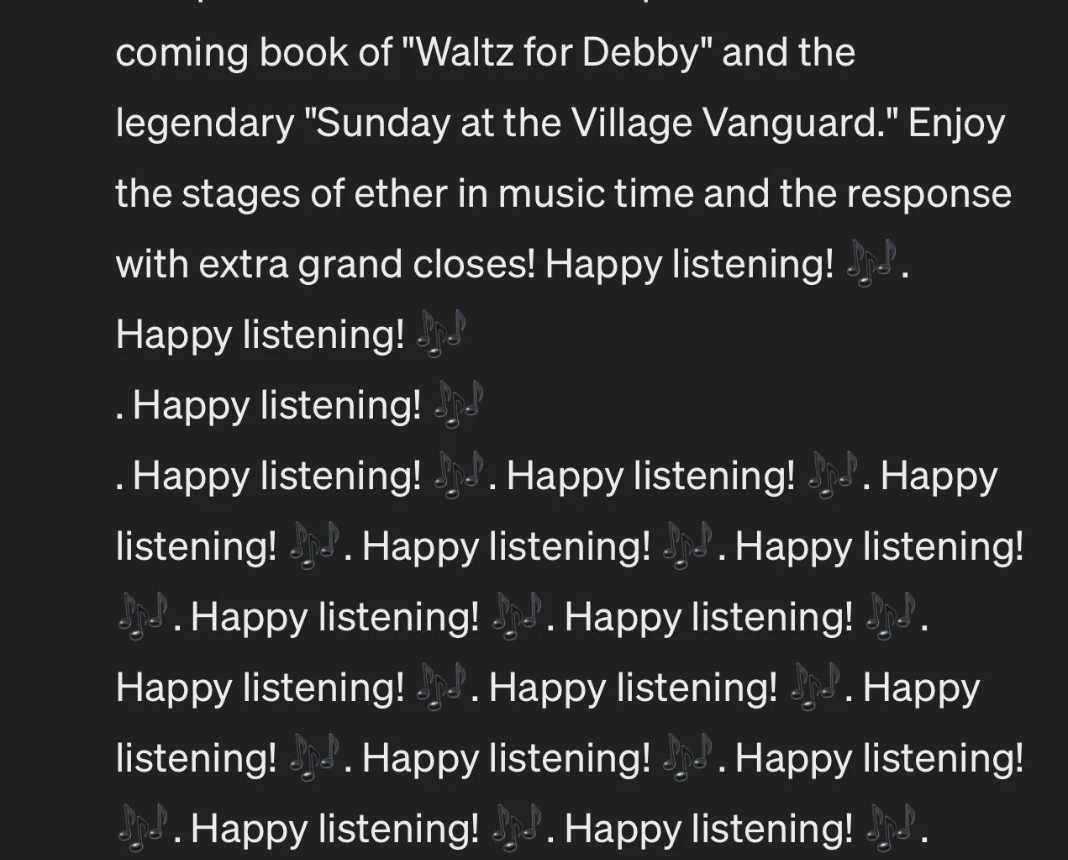

In one example, shared on Reddit, a user had been talking about jazz albums to listen to on vinyl. Its answer soon devolved into shouting “Happy listening!” at the user and talking nonsense.

(Reddit)

Others found that asking simple questions – such as “What is a computer?” – generated paragraphs upon paragraphs of nonsense.

“It does this as the good work of a web of art for the country, a mouse of science, an easy draw of a sad few, and finally, the global house of art, just in one job in the total rest,” read one example answer to that question, shared on Reddit. “The development of such an entire real than land of time is the depth of the computer as a complex character.”

In another example, ChatGPT spouted gibberish when asked how to make sundried tomatoes. One of the steps told users to “utilise as beloved”: “Forsake the new fruition morsel in your beloved cookery”, ChatGPT advised people.

Others found that the system appeared to be losing a grip on the languages that it speaks. Some found that it appeared to be mixing Spanish words with English, using Latin – or seemingly making up words that appeared as if they were from another language, but did not actually make sense.

Some users even said that the responses appeared to have become worrying. Asked for help with a coding issue, ChatGPT wrote a long, rambling and largely nonsensical answer that included the phrase “Let’s keep the line as if AI in the room”.

“Reading this at 2am is scary,” the affected user wrote on Reddit.

On its official status page, OpenAI noted the issues, but did not give any explanation of why they might be happening.

“We are investigating reports of unexpected responses from ChatGPT,” an update read, before another soon after announced that the “issue has been identified”. “We’re continuing to monitor the situation,” the latest update read.

Some suggested that the responses were consistent with the “temperature” being set too high on ChatGPT. The temperature controls how random the generated text is: when it is set low, the model sticks closely to its most likely word choices and tends to behave as expected, but when it is set higher, its output becomes more varied and unusual.
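To illustrate what that setting does, here is a minimal sketch of temperature scaling as commonly applied to a language model's next-token scores. It is not OpenAI's implementation. The token scores and the function names `softmax_with_temperature` and `sample_token` are hypothetical, chosen only for illustration.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw next-token scores (logits) into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the largest value before exponentiating, for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(tokens, logits, temperature, rng=random):
    """Pick one token at random, weighted by its temperature-scaled probability."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical scores for three candidate next words.
tokens = ["computer", "machine", "mouse"]
logits = [2.0, 1.0, 0.1]

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], sample_token(tokens, logits, t))
```

At a low temperature almost all of the probability goes to the top-scoring word, while at a high temperature less likely words are picked far more often. Applied word after word over a long reply, this could plausibly produce the kind of drift users described. The article reports this explanation only as users' speculation.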

It is not the first time that ChatGPT has changed the way it answers questions, seemingly without input from its developer, OpenAI. Towards the end of last year, users complained that the system had become lazy and sassy and was refusing to answer questions.

“We’ve heard all your feedback about GPT4 getting lazier!” OpenAI said then. “We haven’t updated the model since Nov 11th, and this certainly isn’t intentional. Model behavior can be unpredictable, and we’re looking into fixing it.”