TikTok will eventually also filter out content that spreads misinformation...

TikTok to flag and downrank ‘unsubstantiated’ claims fact checkers can’t verify

Sarah Perez (@sarahintampa) / 9:00 PM GMT+8 • February 3, 2021

Image Credits: TikTok

TikTok this morning announced a new feature that aims to combat the spread of misinformation on its platform. In addition to removing videos that are identified to be spreading false information, as verified by fact-checking partners, the company says it will now also flag videos where fact checks are inconclusive. These videos may also become ineligible for promotion into anyone’s For You page, TikTok notes.

The new feature will first launch in the U.S. and Canada, but will become globally available in the “coming weeks.”

The company explains that fact checkers aren’t always able to verify the information being reported in users’ videos. This could be because the fact check is inconclusive or can’t be immediately confirmed, such as in the case of “unfolding events.” (The recent storming of the U.S. Capitol comes to mind as an “unfolding event” that led to a surge of social media posts, only some of which were able to be quickly and accurately fact-checked.)

TikTok today works with partner fact checkers to help the company determine which videos are sharing misinformation. In the U.S., its partners include PolitiFact, Lead Stories and SciVerify, which work to assess the accuracy of content in areas related to civic processes, like elections, as well as health (e.g. COVID-19, vaccines), climate and more.

Internationally, TikTok works with Agence France-Presse (AFP), Animal Político, Estadão Verifica, Lead Stories, Logically, Newtral, Pagella Politica, PolitiFact, SciVerify and Teyit.

Typically, TikTok’s internal investigation and moderation team works to first verify misinformation using readily available information, like existing public fact checks. If it can’t do so, it will send the video to a fact-checking partner. If the fact check determines content is false, disinformation, manipulated media or anything else that violates TikTok’s misinformation policy, it’s simply removed.

These fact checks can be returned in as little as one hour, and most are completed in less than a day, TikTok tells TechCrunch.

But going forward, if the fact checker can’t confirm the accuracy of the video’s content, it will be flagged as unsubstantiated content instead.
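The decision flow described above can be sketched roughly in code. This is only an illustration of the steps as reported, not TikTok's actual system: the names `Verdict`, `Action`, `triage` and `ask_partner` are hypothetical, and the "no action" outcome for content confirmed as accurate is an assumption, since the company does not describe that case.

```python
from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    FALSE = "false"                # false, disinformation, manipulated media, etc.
    ACCURATE = "accurate"
    INCONCLUSIVE = "inconclusive"  # fact check could not confirm either way


class Action(Enum):
    REMOVE = "remove"
    FLAG_UNSUBSTANTIATED = "flag_unsubstantiated"  # banner, share prompt, may be kept off For You
    NO_ACTION = "no_action"        # assumption: the article does not cover this case


def triage(internal: Optional[Verdict], ask_partner: Callable[[], Verdict]) -> Action:
    """Hypothetical sketch: internal review first, partner fact check as fallback."""
    # None means the internal team could not verify the claim with
    # readily available information, so the video goes to a partner.
    verdict = internal if internal is not None else ask_partner()
    if verdict is Verdict.FALSE:
        return Action.REMOVE
    if verdict is Verdict.INCONCLUSIVE:
        return Action.FLAG_UNSUBSTANTIATED
    return Action.NO_ACTION
```

For instance, `triage(None, lambda: Verdict.INCONCLUSIVE)` would return `Action.FLAG_UNSUBSTANTIATED`, matching the new "unsubstantiated content" label, while a video found to be false at either stage would be removed.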

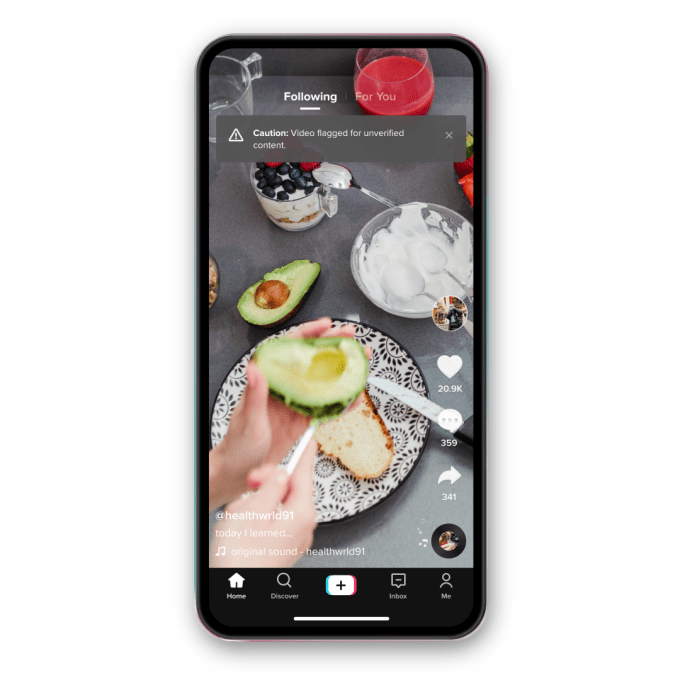

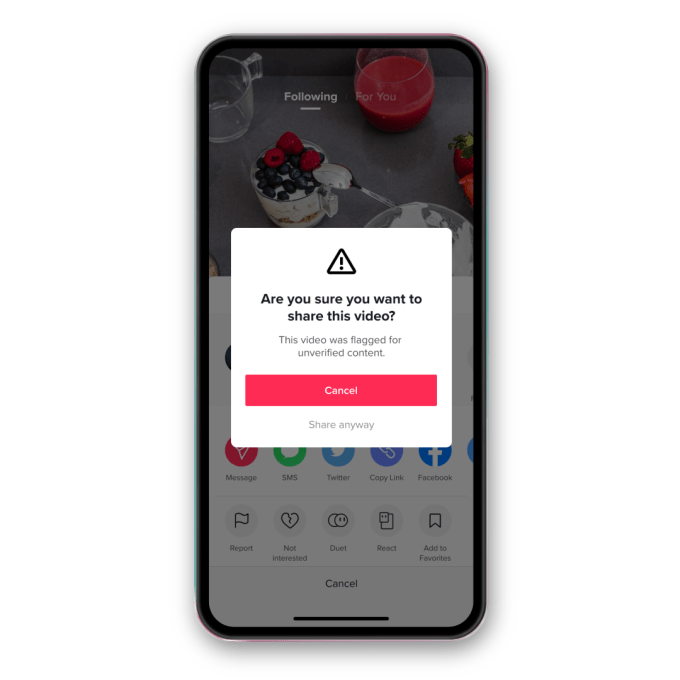

Image Credits: TikTok

A viewer who comes across one of these flagged videos will see a banner that says the content has been reviewed but can’t be conclusively validated. Unlike the COVID-19 banner, which appears at the bottom of the video, this new banner is more prominently overlaid across the video at the top of the screen.

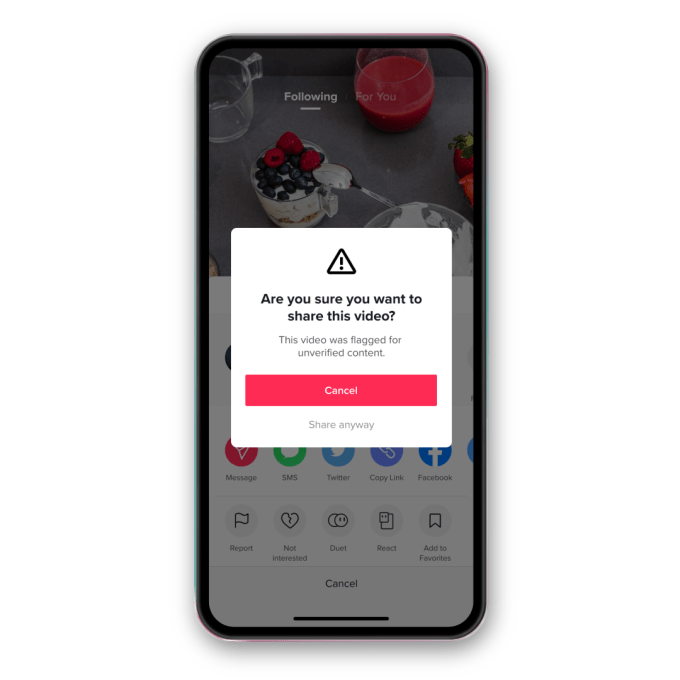

If the user then tries to share that flagged video, they’ll receive a prompt that reminds them the video has been flagged as unverified content. This additional step is meant to give the user a moment to pause and reconsider their actions. They’ll then need to choose whether to click the brightly colored “Cancel” button or the unhighlighted choice, “Share anyway.”

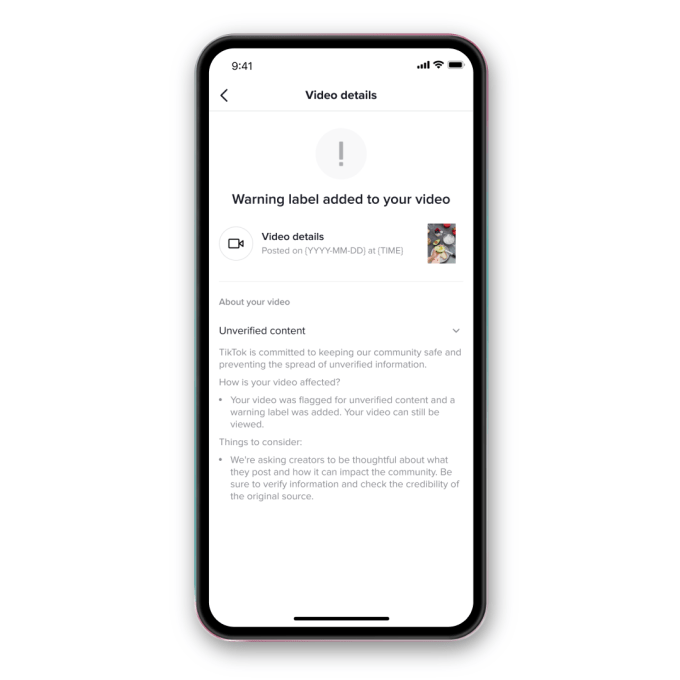

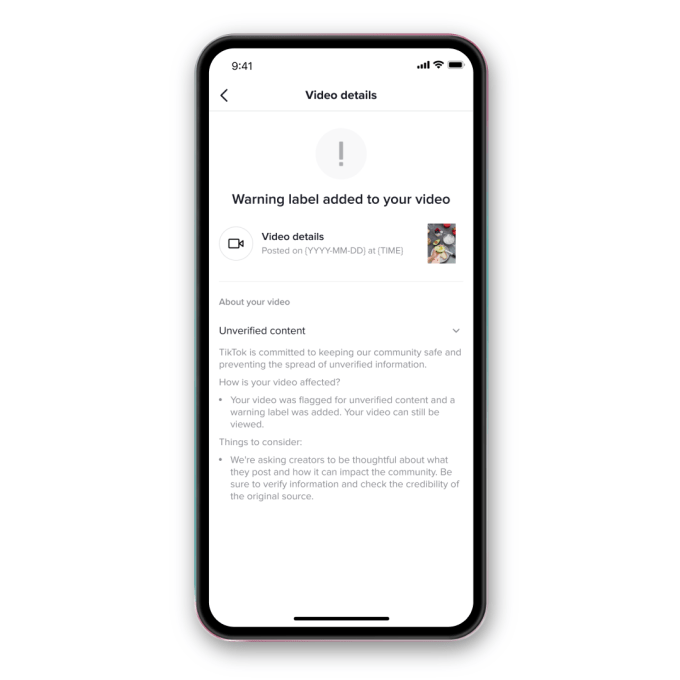

Image Credits: TikTok

The video’s original creator will also be alerted if their video is flagged as unverified content.

TikTok said it tested this labeling system in the U.S. late last year and found that viewers decreased the rate at which they shared videos by 24%. It also found that “likes” on unsubstantiated content decreased by 7%.

This system itself isn’t all that different from efforts made at other social networks to reduce the sharing of false content. For example, Facebook now labels misinformation after it’s reviewed by fact-checking partners and determined to be false. It also notifies people before they try to share the information and downranks the content so it appears lower in users’ News Feeds.

Twitter, too, uses a labeling system to identify misinformation and discourage sharing.

But on other platforms, only verifiably false information is labeled as such. TikTok’s new system moves to tackle the viral spread of unverified content, as well.

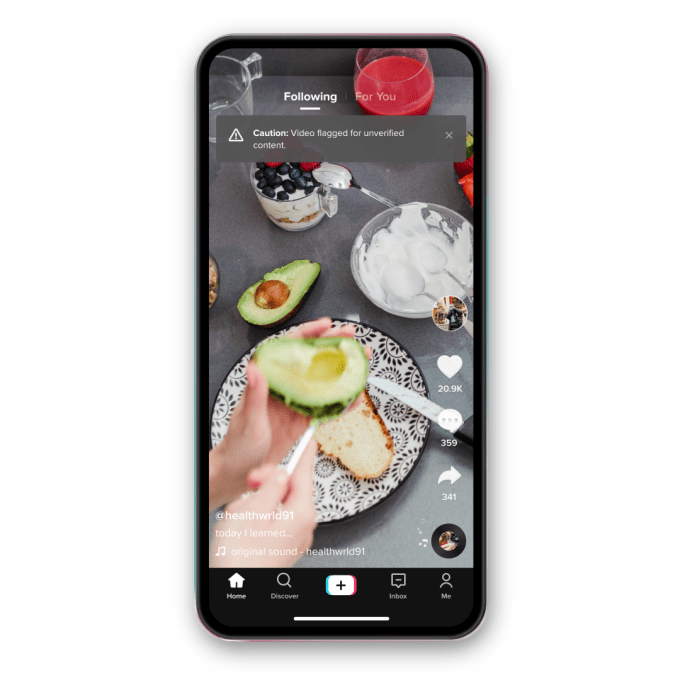

Image Credits: TikTok

That doesn’t mean users won’t see the videos. If someone follows the account, they could still see the flagged video in their Following feed or by visiting the account profile directly.

But TikTok believes the new system will encourage users to “be mindful about what they share.”

It could also potentially deter people from making vague but incendiary claims meant to draw viewers and attention. Knowing that these videos could be downranked to the point that they may never reach the “For You” page could have a dampening effect on a certain type of social media content: the kind that comes from creators who post first and ask questions later, or from those who largely shrug off the impact of their rumors.

The new feature was designed and tested with Irrational Labs, a behavioral science lab that uses the psychology of decision-making to develop solutions that aim to drive positive user behavior changes. TikTok also says the addition is part of its ongoing work to advance media literacy, which has included the “Be Informed” educational videos it created in partnership with the National Association for Media Literacy Education.

The banner will begin appearing in the U.S. and Canada starting today.